Focused Crawling

What is a focused crawler? The definition from Wikipedia says:

A focused crawler or topical crawler is a web crawler that attempts to download only web pages that are relevant to a pre-defined topic or set of topics. Topical crawling generally assumes that only the topic is given, while focused crawling also assumes that some labeled examples of relevant and not relevant pages are available.

The advantage of a focused crawler is that you spend less time, money and effort processing web pages that are unlikely to be of value. Consequently, the crawler takes less time to find areas of the web that are “target rich”, meaning they have a higher density of desirable, high-value pages.

Scale Unlimited Focused Crawling

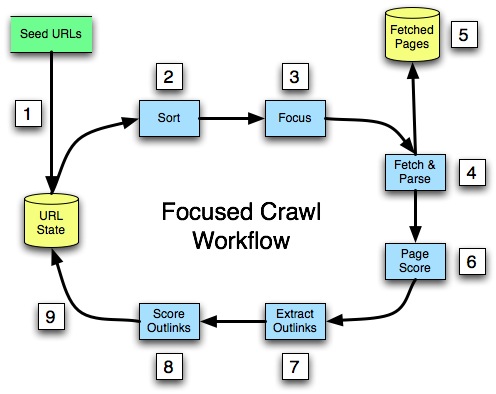

The best way to explain how Scale Unlimited handles focused crawling is with a diagram…

It helps to start off with some terminology, before diving into the actual steps of the workflow.

- URL State database: This database (often called a “CrawlDB”) maintains one entry for each unique URL, along with its status (e.g. “have we fetched this page yet?”), the page score if the page has been fetched, and the link score. The actual approach used for this database varies, depending on the scale of the crawl, the availability of a scalable column-based DB or key/value store, etc.

- Page score: Every fetched page is processed by a page scorer. This calculates a numeric value for the page, where higher values correspond to pages that are of greater interest, given the focus of the crawl. A page scorer can be anything from a simple target term frequency calculation to a complex NLP (natural language processing) analyzer.

- Link score: Every URL has a score that represents the sum of the contributions from all fetched pages that contain an outbound link matching the URL. Each page's score is divided up across all of its outbound links, so a page with many outbound links adds only a small amount to the link score of each URL it points to.

- Fetched Pages database: This is where all fetched pages are stored, using the URL as the key. Typically this isn’t a real database, but rather an optimized, compressed read-only representation.
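
To make the terminology concrete, here is a minimal Python sketch of a URL State entry, a very simple page scorer, and the way a page score might be divided across outbound links. The names `UrlState`, `term_frequency_score` and `link_score_contributions` are hypothetical, and the even split per outlink is an assumption; this is an illustration, not the actual Scale Unlimited implementation.

```python
from __future__ import annotations

import re
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class UrlState:
    """One URL State entry (field names are illustrative only)."""
    url: str
    fetched: bool = False
    page_score: float = 0.0   # set once the page has been fetched and scored
    link_score: float = 0.0   # sum of score shares from pages linking here


def term_frequency_score(text: str, target_terms: set[str]) -> float:
    """Simplest kind of page scorer: fraction of words that are target terms."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word in target_terms)
    return hits / len(words)


def link_score_contributions(page_score: float,
                             outlinks: list[str]) -> dict[str, float]:
    """Divide a page's score across its outlinks (even split is an assumption)."""
    contributions: dict[str, float] = defaultdict(float)
    if not outlinks:
        return contributions
    share = page_score / len(outlinks)
    for url in outlinks:
        contributions[url] += share   # repeated links to one URL accumulate
    return contributions
```

With this scorer, a page scoring 0.8 that has four outlinks contributes 0.2 to each linked URL, while the same page with forty outlinks would contribute only 0.02 to each.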

With terminology out of the way, we can discuss the steps in a focused crawl workflow.

- The first step is to load the URL State database with an initial set of URLs. These can be a broad set of top-level domains such as the 1.7 million web sites with the highest US-based traffic, or the results from selective searches against another index, or manually selected URLs that point to specific, high quality pages.

- Once the URL State database has been loaded with some initial URLs, the first loop in the focused crawl can begin. The first step in each loop is to extract all of the unprocessed URLs, and sort them by their link score.

- Next comes one of the two critical steps in the workflow. A decision is made about how many of the top-scoring URLs to process in this loop. The smaller the number, the “tighter” the focus of the crawl. There are many options for deciding how many URLs to accept, for example a fixed minimum score, a fixed percentage of all URLs, or a maximum count. More sophisticated approaches include picking a cutoff score that represents the transition point (elbow) in a power curve.

- Once the set of accepted URLs has been created, the standard fetch process begins. This includes all of the usual steps required for polite & efficient fetching, such as robots.txt processing. Pages that are successfully fetched can then be parsed.

- Typically fetched pages are also saved into the Fetched Pages database.

- Now comes the second of the two critical steps. The parsed page content is given to the page scorer, which returns a value representing how closely the page matches the focus of the crawl. Typically this is a value from 0.0 to 1.0, with higher scores being better.

- Once the page has been scored, each outlink found in the parse is extracted.

- The score for the page is divided among all of the outlinks.

- Finally, the URL State database is updated with the results of fetch attempts (succeeded, failed), all newly discovered URLs are added, and any existing URLs get their link score increased by all matching outlinks that were extracted during this loop.
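
The options for choosing how many URLs to accept in a loop can be sketched as small Python functions over `(url, link_score)` pairs. All function names here are hypothetical. The elbow heuristic shown (the point farthest below the straight line joining the highest and lowest scores, after normalizing both axes) is just one common way to locate an elbow, swapped in for illustration; the source does not say which method is actually used.

```python
import math


def rank(scored_urls):
    """Sort (url, link_score) pairs from highest to lowest score."""
    return sorted(scored_urls, key=lambda pair: pair[1], reverse=True)


def accept_by_min_score(scored_urls, min_score):
    """Fixed minimum score: keep URLs scoring at least min_score."""
    return [pair for pair in rank(scored_urls) if pair[1] >= min_score]


def accept_by_fraction(scored_urls, fraction):
    """Fixed percentage: keep the top `fraction` (0.0 to 1.0) of all URLs."""
    ranked = rank(scored_urls)
    return ranked[:math.ceil(len(ranked) * fraction)]


def accept_by_max_count(scored_urls, max_count):
    """Maximum count: keep at most max_count URLs."""
    return rank(scored_urls)[:max_count]


def accept_by_elbow(scored_urls):
    """Keep the URLs ranked above the elbow of the sorted score curve."""
    ranked = rank(scored_urls)
    n = len(ranked)
    if n < 3 or ranked[0][1] == ranked[-1][1]:
        return ranked   # too few points, or a flat curve: no elbow to find
    top, bottom = ranked[0][1], ranked[-1][1]

    def gap(i):
        # Normalize rank and score to [0, 1], then measure how far the
        # point lies below the line from (0, 1) to (1, 0).
        x = i / (n - 1)
        y = (ranked[i][1] - bottom) / (top - bottom)
        return 1.0 - x - y

    elbow = max(range(n), key=gap)
    return ranked[:max(elbow, 1)]   # always accept at least the top URL
```

A tighter focus corresponds to a higher `min_score`, a smaller `fraction`, or a smaller `max_count`.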

At this point the focused crawl can terminate, if sufficient pages of high enough quality (score) have been found, or the next loop can begin.
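
Putting the workflow steps and the termination check together, one possible shape for the loop is sketched below in Python. Everything here is a simplifying assumption: `TOY_WEB` is a made-up in-memory graph that stands in for real fetching and parsing, `score_page` is a trivial term-frequency scorer, two dictionaries play the role of the URL State database, and a fixed maximum count per loop is used as the acceptance rule.

```python
from __future__ import annotations

# Toy "web": URL -> (page text, outlinks). Replaces real fetching and parsing.
TOY_WEB = {
    "seed": ("solar energy news", ["a", "b"]),
    "a": ("solar solar panels", ["c"]),
    "b": ("celebrity gossip", ["d"]),
    "c": ("solar cells", []),
    "d": ("more gossip", []),
}


def score_page(text: str) -> float:
    """Trivial page scorer: fraction of words equal to 'solar' (0.0 to 1.0)."""
    words = text.lower().split()
    return words.count("solar") / len(words) if words else 0.0


def focused_crawl(seeds, urls_per_loop, target_pages, min_page_score,
                  max_loops=10):
    link_score = {url: 1.0 for url in seeds}   # seed score is arbitrary
    page_score: dict[str, float] = {}          # entries exist once processed
    fetch_order: list[str] = []

    for _ in range(max_loops):
        # Extract unprocessed URLs and sort them by link score.
        unprocessed = [u for u in link_score if u not in page_score]
        if not unprocessed:
            break
        unprocessed.sort(key=link_score.get, reverse=True)

        # Accept only the top-scoring URLs for this loop.
        for url in unprocessed[:urls_per_loop]:
            page = TOY_WEB.get(url)
            if page is None:
                page_score[url] = 0.0          # failed fetch: mark as processed
                continue
            text, outlinks = page
            score = score_page(text)
            page_score[url] = score
            fetch_order.append(url)
            # Divide the page score among outlinks and update link scores.
            for out in outlinks:
                link_score[out] = link_score.get(out, 0.0) + score / len(outlinks)

        # Terminate once enough sufficiently high-scoring pages were found.
        good = sum(1 for s in page_score.values() if s >= min_page_score)
        if good >= target_pages:
            break

    return fetch_order, page_score
```

Calling `focused_crawl(["seed"], urls_per_loop=1, target_pages=2, min_page_score=0.5)` on this toy graph fetches `seed`, `a` and `c` and then stops, without ever spending a fetch on the off-topic page `b`.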

In this way the crawl proceeds in a best-first order, following the highest-scoring links first and so going deeper into areas of the web graph where the most high-scoring pages are found.